Merge Multiple JSON Files

Overview

Sometimes it's necessary to merge multiple structured files into a single file, for example prior to a bulk SQL execution. This guide explains how to use the MergeRecord processor to do so. For this project we used GenerateRecord to create many JSON documents containing auto-generated first and last name fields, then split that result set into individual JSON files.

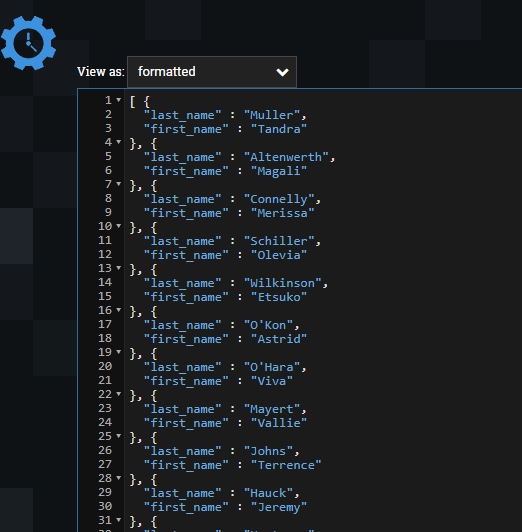

The content of each file looks something like:

{
  "first_name" : "Krystina",
  "last_name" : "Carroll"
}
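To make the input shape concrete, here is a minimal Python sketch of the "split into individual JSON files" step: each record becomes its own standalone JSON document. This is only an illustration outside NiFi; `split_into_documents` is a hypothetical helper, and the second sample record is made up (GenerateRecord produces its own random names).

```python
import json

def split_into_documents(records):
    """Serialize each record as its own standalone JSON document,
    mimicking the one-record-per-file output of the split step."""
    return [json.dumps(record) for record in records]

# Hypothetical sample records; the second name is invented for illustration.
records = [
    {"first_name": "Krystina", "last_name": "Carroll"},
    {"first_name": "Alex", "last_name": "Moreno"},
]

documents = split_into_documents(records)
for doc in documents:
    print(doc)  # one flat JSON object per "file"
```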

Processor Configuration

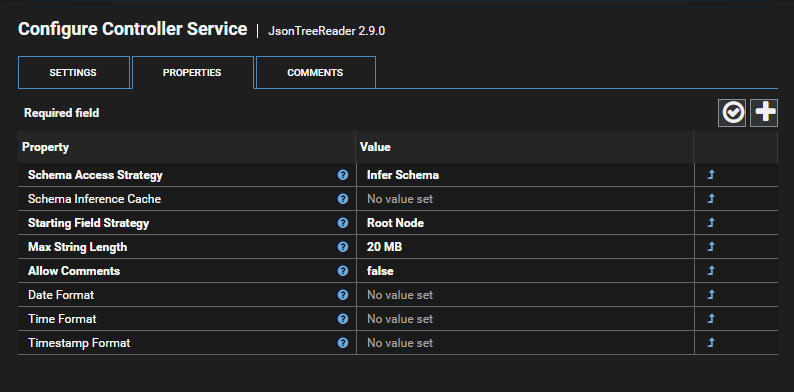

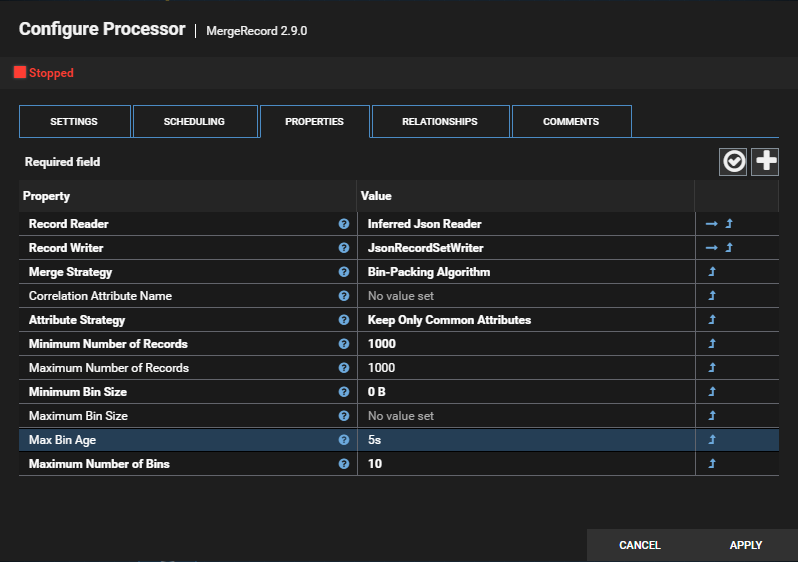

MergeRecord depends on two Controller Services in order to function: a Record Reader and a Record Writer. Because the input JSON objects are flat, we can use the default values when creating each Controller Service. For the Reader we'll need a new JsonTreeReader service. Since this component will most likely be reused across multiple integration workflows, we'll create it scoped to the Root canvas and name it 'Inferred Json Reader'.
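The reader name reflects that, with default settings in recent NiFi versions, JsonTreeReader infers the record schema from the data itself. The sketch below is a deliberately simplified stand-in for that idea (it is not NiFi's inference algorithm): it maps each field of flat JSON objects to the Python types observed, and rejects nested values to show why "flat" input is the easy case. `infer_flat_schema` is a hypothetical name.

```python
import json

def infer_flat_schema(documents):
    """Simplified schema inference for flat JSON objects:
    map each field name to the set of Python type names seen for it."""
    schema = {}
    for doc in documents:
        obj = json.loads(doc)
        for field_name, value in obj.items():
            # Nested objects/arrays would need a richer, recursive schema.
            if isinstance(value, (dict, list)):
                raise ValueError(f"field {field_name!r} is nested; not a flat record")
            schema.setdefault(field_name, set()).add(type(value).__name__)
    return schema

docs = ['{"first_name": "Krystina", "last_name": "Carroll"}']
print(infer_flat_schema(docs))
```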

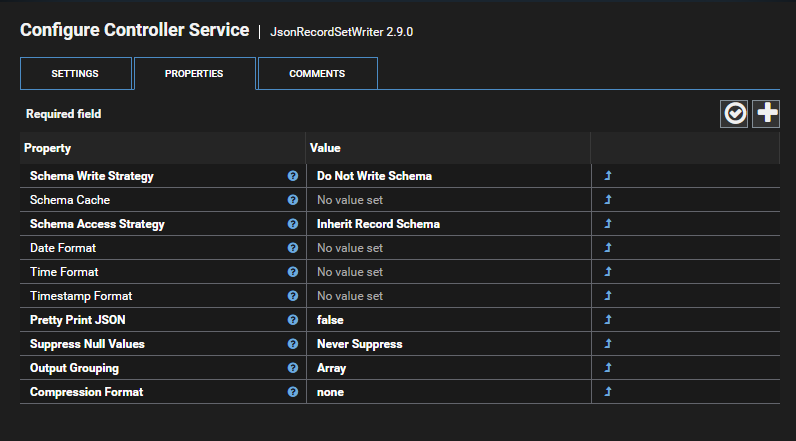

We also need a JsonRecordSetWriter, which will be used to create the JSON output. This Controller Service should also be created at the Root canvas to allow reuse across different flows. As above, the default values are fine.
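Conceptually, the reader and writer split the merge into two halves: parse each incoming document into records, then serialize the collected records as one output. A minimal Python sketch of that round trip, assuming the writer emits a single JSON array (consistent with the Result section, which describes the merged content as a JSON array); `merge_documents` is a hypothetical helper, and the second record is invented sample data.

```python
import json

def merge_documents(documents):
    """Read each standalone JSON object (the 'reader' side) and write
    them all out together as one JSON array (the 'writer' side)."""
    records = [json.loads(doc) for doc in documents]
    return json.dumps(records)

docs = [
    '{"first_name": "Krystina", "last_name": "Carroll"}',
    '{"first_name": "Alex", "last_name": "Moreno"}',  # hypothetical second record
]
merged = merge_documents(docs)
print(merged)
```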

Ensure that both Controller Services are enabled, then configure the MergeRecord processor to use these services. Additionally, the Minimum Number of Records and Max Bin Age properties need to be configured.

In this example, Minimum Number of Records is set to 1000 and Max Bin Age is set to 5s. This tells the processor to release the merged file once it contains 1000 records or once 5 seconds have elapsed since the bin received its first file, whichever comes first. Note: if Max Bin Age is left empty, the processor will wait indefinitely until 1000 records are ready to be merged.
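The release rule described above can be modelled in a few lines of Python. This is a simplified sketch, not MergeRecord's implementation: it assumes a single bin, checks bin age only when a new record arrives, and ignores other settings such as Maximum Number of Records, bin size limits, and correlation attributes. `simulate_merge` and `Bin` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Any, List, Optional

@dataclass
class Bin:
    created_at: float                      # time the first record entered the bin
    records: List[Any] = field(default_factory=list)

def simulate_merge(arrivals, min_records=1000, max_bin_age: Optional[float] = 5.0):
    """arrivals: (timestamp_seconds, record) pairs in time order.
    Returns (released_batches, records_still_waiting)."""
    released: List[List[Any]] = []
    current: Optional[Bin] = None
    for ts, record in arrivals:
        # Release the open bin if it aged out before this record arrived.
        if current is not None and max_bin_age is not None and ts - current.created_at >= max_bin_age:
            released.append(current.records)
            current = None
        if current is None:
            current = Bin(created_at=ts)
        current.records.append(record)
        # Release as soon as the record-count threshold is reached.
        if len(current.records) >= min_records:
            released.append(current.records)
            current = None
    if current is not None and max_bin_age is not None:
        # With an age limit, a partial bin is eventually released anyway.
        released.append(current.records)
        current = None
    waiting = current.records if current is not None else []
    return released, waiting

burst = [(0.0, i) for i in range(2500)]      # 2500 records arriving at once
released, waiting = simulate_merge(burst)
print([len(b) for b in released], len(waiting))  # [1000, 1000, 500] 0
released, waiting = simulate_merge(burst, max_bin_age=None)
print([len(b) for b in released], len(waiting))  # [1000, 1000] 500
```

The second call shows the note above in action: without a Max Bin Age, the last 500 records sit in the bin until enough further records arrive.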

Result

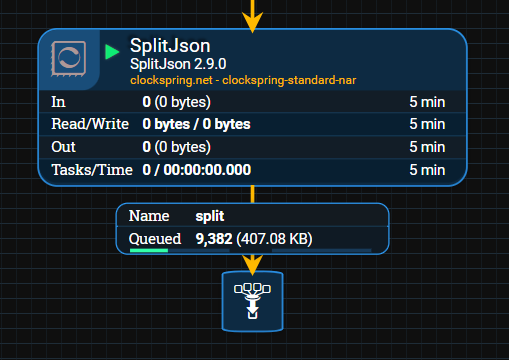

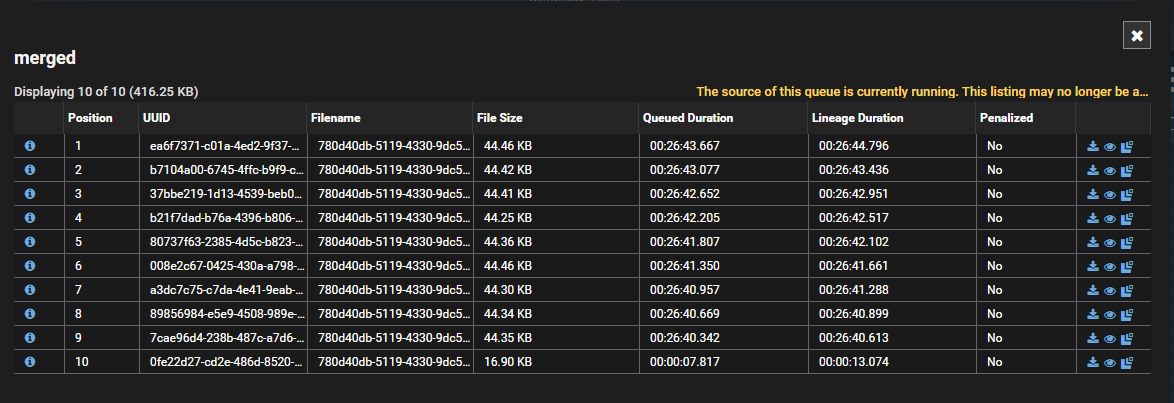

Once the merge is complete, the result is 10 flowfiles. The first nine flowfiles each contain 1000 records, and the tenth contains the remaining 382.
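These counts follow from simple division: nine full bins of 1000 plus a final partial bin of 382 implies 9 × 1000 + 382 = 9,382 input records. A quick Python check, assuming every bin except the last fills to the record threshold before aging out; `bin_sizes` is a hypothetical helper.

```python
def bin_sizes(total_records, records_per_bin=1000):
    """Split a record count into full bins plus one partial remainder bin."""
    full, remainder = divmod(total_records, records_per_bin)
    return [records_per_bin] * full + ([remainder] if remainder else [])

sizes = bin_sizes(9382)
print(len(sizes), sizes[-1])  # 10 382
```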

The content of each flowfile is now a JSON array: